Let’s dive deeper and look at the architectural constructs that make it possible for the Cohesity platform to consolidate and converge all secondary storage workflows, such as backup, file shares, test/dev, and analytics, into a fully distributed, scale-out, hyperconverged platform.

The core premise of the design is a fresh, holistic look at secondary storage for enterprises of all sizes.

Next, I will introduce three concepts: a Partition, a View Box and a View. All of these constructs are created within the hierarchy of a cluster.
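To make the hierarchy concrete, here is a minimal Python sketch of how the three constructs nest inside a cluster. The class names, the cluster and node names, and the path format are hypothetical illustrations, not a Cohesity API; only p1, vb1 and smb-test come from the examples in this post.

```python
from dataclasses import dataclass, field

# Hypothetical model of the hierarchy: Cluster -> Partition -> View Box -> View.
# These classes are illustrative only and are not part of any Cohesity API.


@dataclass
class View:
    name: str


@dataclass
class ViewBox:
    name: str
    views: list[View] = field(default_factory=list)


@dataclass
class Partition:
    name: str
    nodes: list[str] = field(default_factory=list)
    view_boxes: list[ViewBox] = field(default_factory=list)


@dataclass
class Cluster:
    name: str
    partitions: list[Partition] = field(default_factory=list)

    def view_paths(self) -> list[str]:
        """List every View as a 'cluster/partition/view-box/view' path."""
        return [
            f"{self.name}/{p.name}/{vb.name}/{v.name}"
            for p in self.partitions
            for vb in p.view_boxes
            for v in vb.views
        ]


cluster = Cluster("cluster1", [
    Partition("p1", ["node1", "node2", "node3"],
              [ViewBox("vb1", [View("smb-test")])]),
])
print(cluster.view_paths())  # ['cluster1/p1/vb1/smb-test']
```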

A Partition

A Partition is a named logical unit made up of a collection of physical nodes. Data is stored on the nodes assigned to the partition. Partitions can map to a logical characteristic of your organization: for example, the sales department could have some nodes belonging to partition 1, and the marketing department could have nodes belonging to partition 2. Nodes can also be assigned to partitions based on workload. For example, with 12 nodes in total, you could have a partition of 6 nodes for backing up VMware virtual machines, 3 nodes dedicated to backing up Oracle databases using RMAN, and the remaining 3 nodes dedicated to file shares, which could host home directories, for example to support VDI workloads.
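The 12-node example above can be sketched as a simple assignment of nodes to workload-based partitions. This is a hypothetical illustration that assumes each node belongs to exactly one partition; the function, node and partition names are made up for the example.

```python
# Hypothetical sketch: carve 12 nodes into workload-based partitions
# (6 for VMware backup, 3 for Oracle RMAN, 3 for file shares).


def assign_partitions(nodes, sizes):
    """Give each partition a consecutive, non-overlapping slice of nodes.

    sizes maps partition name -> number of nodes requested.
    """
    if sum(sizes.values()) > len(nodes):
        raise ValueError("not enough nodes for the requested partitions")
    layout, start = {}, 0
    for name, count in sizes.items():
        layout[name] = nodes[start:start + count]
        start += count
    return layout


nodes = [f"node{i}" for i in range(1, 13)]
layout = assign_partitions(
    nodes, {"vmware-backup": 6, "oracle-rman": 3, "file-shares": 3}
)
for name, members in layout.items():
    print(f"{name}: {', '.join(members)}")
```

Slicing a single ordered node list keeps the partitions disjoint by construction, which matches the assumption that a node belongs to only one partition.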

You can see how powerful partitions could be for service providers, who could create 3 different partitions for Premier, Elite and Standard customers. SLAs could be tied to each of these partitions, and customers would pay for the service tier they use.

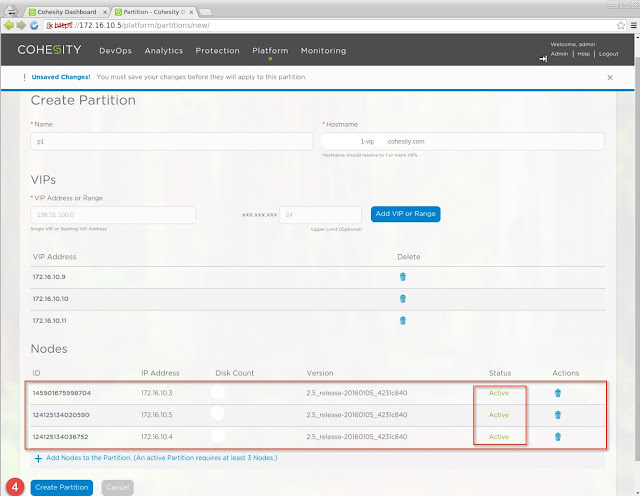

Here is how you create a partition using the Cohesity UI.

- Provide a name for the partition - in this example, I am naming it p1.

- Assign VIP (virtual IP) addresses to the partition - VIPs are used for resiliency and load balancing, and they also enable non-disruptive upgrades of the Cohesity platform.

- Add nodes to the partition - at least 3 nodes are required per partition.

Once the nodes are assigned to the partition, click "Create Partition" to save the configuration.
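The three inputs from the steps above can be checked with a small validation function. This is a hypothetical sketch: `create_partition`, the 10.0.0.x addresses, and the round-robin VIP placement are assumptions for illustration, since the post does not describe how the platform actually places VIPs on nodes.

```python
import ipaddress

# Hypothetical validation of the partition form: name, VIPs, and >= 3 nodes.
# VIPs are spread round-robin across nodes purely for illustration.


def create_partition(name, vips, nodes):
    if not name:
        raise ValueError("partition name is required")
    if len(nodes) < 3:
        raise ValueError("at least 3 nodes are required per partition")
    parsed = [ipaddress.ip_address(v) for v in vips]  # ValueError if malformed
    if len(set(parsed)) != len(parsed):
        raise ValueError("duplicate VIP")
    placement = {node: [] for node in nodes}
    for i, vip in enumerate(vips):
        placement[nodes[i % len(nodes)]].append(vip)
    return {"name": name, "vip_placement": placement}


p1 = create_partition("p1", ["10.0.0.11", "10.0.0.12", "10.0.0.13"],
                      ["node1", "node2", "node3"])
print(p1["vip_placement"])
# {'node1': ['10.0.0.11'], 'node2': ['10.0.0.12'], 'node3': ['10.0.0.13']}
```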

The partition is now created, and the 3 nodes have been assigned the VIPs. If a node's underlying hardware were to fail or experience an outage, the VIPs associated with that node would float to another node in the partition, so NFS/SMB clients would be unaffected by the physical outage. The client systems would see no stale file handles or interruptions, and operations would continue normally.
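The VIP failover behavior described above can be modeled in a few lines. In this hypothetical sketch, each VIP owned by a failed node moves to the surviving node that currently holds the fewest VIPs; that placement rule is an assumption, not documented Cohesity behavior. The point is that the VIP address itself does not change, so clients keep talking to the same address.

```python
# Hypothetical VIP failover: VIPs from a failed node move to the surviving node
# with the fewest VIPs (an assumed rule). Addresses and node names are placeholders.


def fail_node(placement, failed):
    survivors = {n: list(v) for n, v in placement.items() if n != failed}
    if not survivors:
        raise RuntimeError("no surviving nodes to take over VIPs")
    for vip in placement.get(failed, []):
        # On ties, min() picks the first node in insertion order.
        target = min(survivors, key=lambda n: len(survivors[n]))
        survivors[target].append(vip)
    return survivors


placement = {"node1": ["10.0.0.11"], "node2": ["10.0.0.12"], "node3": ["10.0.0.13"]}
after = fail_node(placement, "node2")
print(after)
# {'node1': ['10.0.0.11', '10.0.0.12'], 'node3': ['10.0.0.13']}
```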

View Box

A View Box is a named storage location on a Partition. A View Box defines the storage policy for deduplication and when deduplication occurs, i.e., inline or post-process. When a backup job is configured, you specify the View Box in which that job is saved, and the Cohesity snapshots are stored in that View Box according to its storage policies. Service providers could also choose to map each customer to a View Box, since all storage policies are applied at the View Box level.
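The difference between inline and post-process deduplication can be sketched with a toy chunk store. Inline mode fingerprints each chunk before storing it, while post-process mode stores raw chunks first and deduplicates them in a later pass. Fixed 4-byte chunks and SHA-256 fingerprints are simplifying assumptions for illustration, not Cohesity's actual chunking or hashing scheme.

```python
import hashlib

# Toy chunk store contrasting inline vs. post-process deduplication.
# Fixed 4-byte chunks and SHA-256 fingerprints are illustrative assumptions.
CHUNK_SIZE = 4


def split_chunks(data: bytes) -> list[bytes]:
    return [data[i:i + CHUNK_SIZE] for i in range(0, len(data), CHUNK_SIZE)]


class ChunkStore:
    def __init__(self, mode: str):
        if mode not in ("inline", "post-process"):
            raise ValueError("mode must be 'inline' or 'post-process'")
        self.mode = mode
        self.unique = {}   # fingerprint -> chunk
        self.pending = []  # raw chunks not yet deduplicated

    def _store_unique(self, chunk: bytes) -> None:
        self.unique.setdefault(hashlib.sha256(chunk).hexdigest(), chunk)

    def write(self, data: bytes) -> None:
        for chunk in split_chunks(data):
            if self.mode == "inline":
                self._store_unique(chunk)   # dedupe on the write path
            else:
                self.pending.append(chunk)  # land raw data now, dedupe later

    def run_post_process(self) -> None:
        for chunk in self.pending:
            self._store_unique(chunk)
        self.pending.clear()

    def stored_chunks(self) -> int:
        return len(self.unique) + len(self.pending)


data = b"ABCDABCDABCDWXYZ"  # four chunks, two of them unique
inline = ChunkStore("inline")
inline.write(data)
post = ChunkStore("post-process")
post.write(data)
print(inline.stored_chunks(), post.stored_chunks())  # 2 4
post.run_post_process()
print(post.stored_chunks())                          # 2
```

The sketch shows the usual trade-off: inline deduplication uses less capacity from the start but adds hashing work to every write, while post-process keeps writes simple and temporarily consumes more space.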

- Select the partition from the drop-down - in this case there is only 1 partition.

- Click "Add a View Box" to create the View Box entity.

- Provide a name for the View Box - I called it vb1 in this example - and select the required deduplication storage policies.

In addition, View Boxes contain NFS/SMB exports, known as Views, which can be mounted on client operating systems such as Windows, Linux, Unix and Macintosh.

View

A View is analogous to an NFS datastore or an SMB share, and Views are used to store data. When a View is created manually, as in the steps below, it can be mounted directly on an ESX host as an NFS datastore, on a Linux/Unix host as a distributed NFS mount point, and on a Windows system as an SMB share, all at the same time. In short, a View is a storage volume that can be exposed as a filesystem via NFS or SMB.

The View is added to the vb1 View Box. In this example, I show how you can override the QoS policy defined above at the View level, if required.
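Overriding a policy at the View level can be thought of as a simple inheritance rule: a View uses the policy inherited from above unless it sets its own. In this hypothetical sketch the inherited QoS policy is attached to the View Box, and the policy names `default` and `high-priority` (as well as the View name `backup-view`) are placeholders, not actual Cohesity QoS policy names.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical policy inheritance: a View inherits its View Box's QoS policy
# unless it sets an override. Policy names are placeholders.


@dataclass
class ViewBox:
    name: str
    qos_policy: str


@dataclass
class View:
    name: str
    view_box: ViewBox
    qos_override: Optional[str] = None

    def effective_qos(self) -> str:
        # The View-level override wins; otherwise fall back to the View Box policy.
        if self.qos_override is not None:
            return self.qos_override
        return self.view_box.qos_policy


vb1 = ViewBox("vb1", qos_policy="default")
inherits = View("backup-view", vb1)
smb_test = View("smb-test", vb1, qos_override="high-priority")
print(inherits.effective_qos())  # default
print(smb_test.effective_qos())  # high-priority
```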

In the image below, I create a View called smb-test and make it ready to be mounted on a Windows system for SMB testing.

Now that the View has been created, you can mount it from a Windows client system using the \\server\folder\ path. As seen in the example below, the same filesystem can also be exposed to Unix/Linux systems using the NFS protocol.
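Because one View can be exposed over both protocols, a Windows client and a Linux client can mount the same filesystem. The sketch below only builds the standard client-side commands (`net use` on Windows, `mount -t nfs` on Linux). The host `cohesity-vip`, the drive letter, the mount point, and the `host:/view-name` NFS export path are hypothetical placeholders; use the actual path shown for your View.

```python
# Hypothetical helper that builds client mount commands for one View exposed
# over both SMB and NFS. Host name, drive letter and export path are placeholders.


def mount_commands(host: str, view: str, drive: str = "Z:",
                   mount_point: str = "") -> dict[str, str]:
    mount_point = mount_point or f"/mnt/{view}"
    return {
        # Windows: map the SMB share by its UNC path, e.g. \\host\view
        "windows_smb": f"net use {drive} \\\\{host}\\{view}",
        # Linux/Unix: mount the same View over NFS (assumed export path host:/view)
        "linux_nfs": f"mount -t nfs {host}:/{view} {mount_point}",
    }


cmds = mount_commands("cohesity-vip", "smb-test")
for client, cmd in cmds.items():
    print(f"{client}: {cmd}")
```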

Once the View has been created, you also have the option to create an unlimited number of writable clones of the entire filesystem. These writable clones are, in essence, similar to snapshotting the entire View (the NFS/SMB filesystem being presented as a file share). You can also go back to any older snapshot of the View to see earlier versions of the files in it.
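Snapshots and writable clones of a View can be illustrated with a simplified model. In this hypothetical sketch, a snapshot freezes a copy of the View's path-to-contents map (the contents themselves are shared, not duplicated), and a clone is a new, writable volume seeded from a snapshot. It illustrates the behavior described above, not Cohesity's on-disk implementation.

```python
# Simplified model of View snapshots and writable clones. Snapshots copy only
# the path -> contents map; unchanged contents are shared, not duplicated.


class Volume:
    def __init__(self, files=None):
        self.files = dict(files or {})  # path -> contents (live, writable)
        self.snapshots = []             # frozen path -> contents maps

    def write(self, path, data):
        # Rebinds the entry in this volume's map only; snapshots are untouched.
        self.files[path] = data

    def snapshot(self):
        self.snapshots.append(dict(self.files))
        return len(self.snapshots) - 1  # snapshot id

    def read_at(self, snap_id, path):
        return self.snapshots[snap_id].get(path)

    def clone(self, snap_id):
        # A writable clone starts from a point-in-time snapshot.
        return Volume(self.snapshots[snap_id])


view = Volume({"report.txt": "v1"})
s0 = view.snapshot()
view.write("report.txt", "v2")
dev = view.clone(s0)
dev.write("report.txt", "test edit")
print(view.read_at(s0, "report.txt"))  # v1 (older version still available)
print(view.files["report.txt"])        # v2
print(dev.files["report.txt"])         # test edit
```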

Once the View Boxes are created and their storage policies have been defined, you are ready to add a source (vCenter IP address and credentials) and start protecting all the VMs in that source. When a backup job is created, it creates its own default View and uses it as the target for the job.
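The relationship between a backup job and its default View can be sketched as follows. The `<job-name>-view` naming scheme, the classes, the job name, and the vCenter host name are hypothetical; the post only states that each job creates its own default View and uses it as its target, in the View Box chosen for the job.

```python
from dataclasses import dataclass, field

# Hypothetical sketch: each backup job gets its own default View inside the
# chosen View Box and uses it as its target. Naming scheme is an assumption.


@dataclass
class ViewBox:
    name: str
    views: dict = field(default_factory=dict)  # view name -> owning job name


@dataclass
class BackupJob:
    name: str
    source: str  # e.g. a vCenter address
    target_view: str = ""


def create_backup_job(name, source, view_box):
    view_name = f"{name}-view"
    if view_name in view_box.views:
        raise ValueError(f"view {view_name!r} already exists in {view_box.name}")
    view_box.views[view_name] = name  # the job's own default View
    return BackupJob(name, source, target_view=view_name)


vb1 = ViewBox("vb1")
job = create_backup_job("vmware-daily", "vcenter.example.com", vb1)
print(job.target_view, vb1.views)
# vmware-daily-view {'vmware-daily-view': 'vmware-daily'}
```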

See the "Protect your VMs" blog post to learn more about the Policy Engine and how to associate jobs with the defined policies.

In conclusion, the architectural choices behind the Cohesity platform make designing large distributed clusters easier, because larger fault domains can now be managed under a single cluster with increased overall resiliency.

Thanks to @datacenterdude for proofreading this blog.
